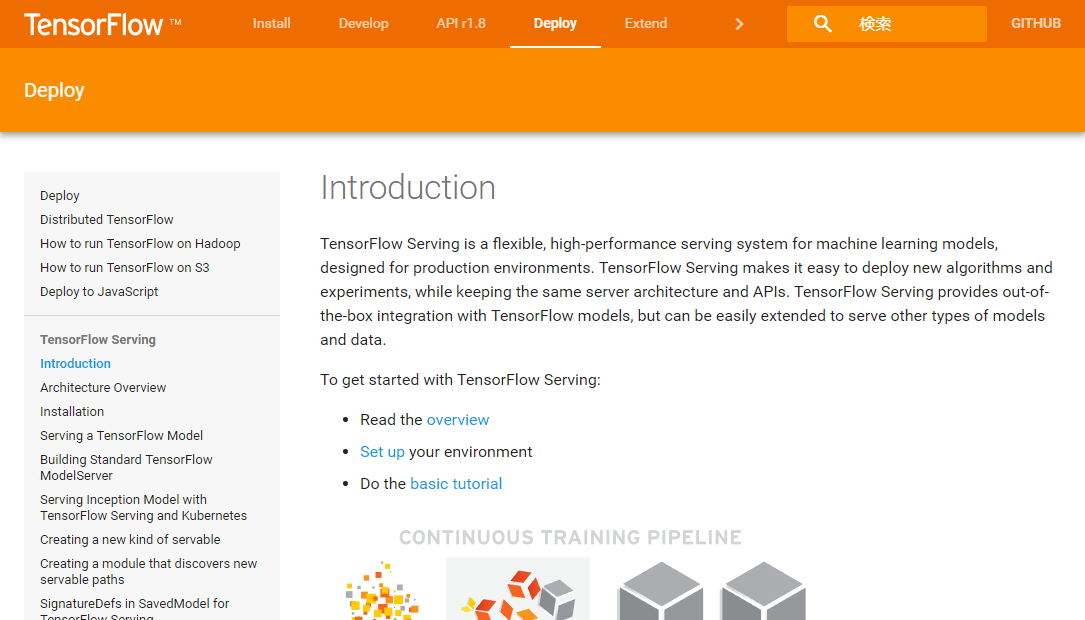

How to deploy Machine Learning models with TensorFlow. Part 2— containerize it! | by Vitaly Bezgachev | Towards Data Science

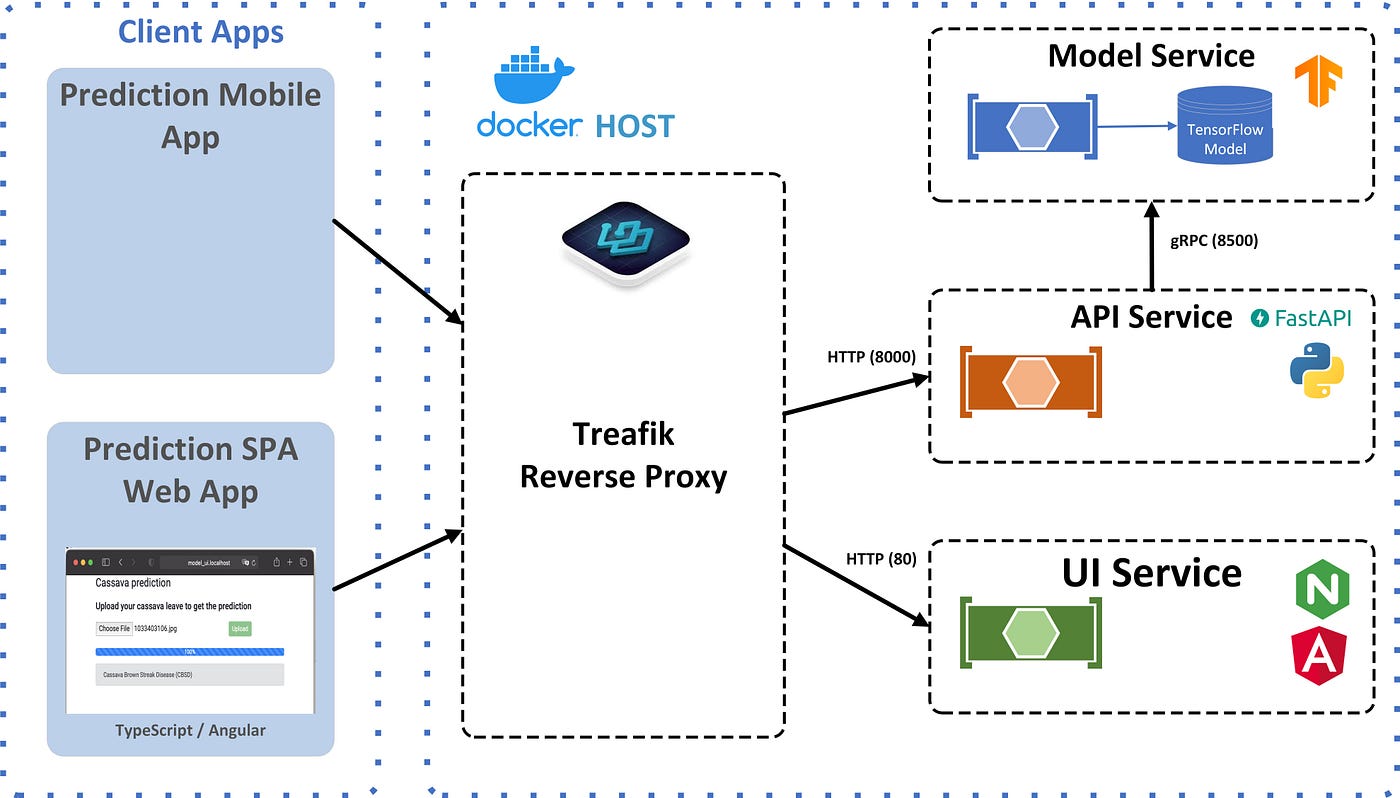

How To Deploy Your TensorFlow Model in a Production Environment | by Patrick Kalkman | Better Programming

Compiling 1.8.0 version with GPU support based on nvidia/cuda:9.0-cudnn7-devel-ubuntu16.04 · Issue #952 · tensorflow/serving · GitHub

TF Serving -Auto Wrap your TF or Keras model & Deploy it with a production-grade GRPC Interface | by Alex Punnen | Better ML | Medium

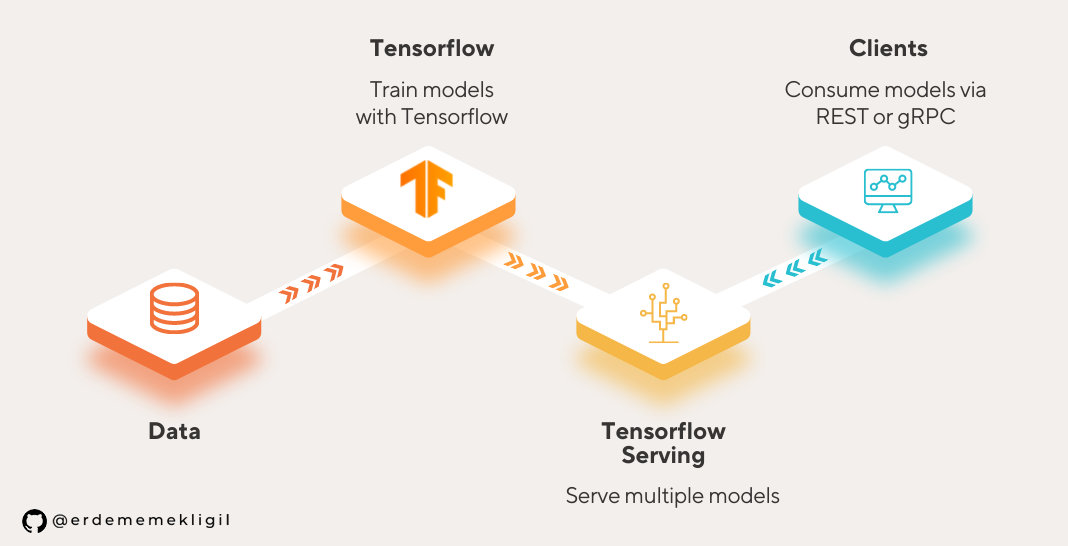

Serving an Image Classification Model with Tensorflow Serving | by Erdem Emekligil | Level Up Coding

GitHub - EsmeYi/tensorflow-serving-gpu: Serve a pre-trained model (Mask-RCNN, Faster-RCNN, SSD) on Tensorflow:Serving.